Spark-based anomaly detection over multi-source VMware performance data in real-time

Solaimani, M. ; Dept. of Comput. Sci., Univ. of Texas at Dallas, Richardson, TX, USA ; Iftekhar, M. ; Khan, L. ; Thuraisingham, B.

Anomaly detection refers to identifying patterns in data that deviate from expected behavior. These non-conforming patterns are often termed outliers, malware, anomalies, or exceptions in different application domains. This paper presents a novel, generic, real-time distributed anomaly detection framework for multi-source stream data. As a case study, we detect anomalies in a multi-source VMware-based cloud data center. The framework continuously monitors VMware performance stream data (e.g., CPU load and memory usage). It collects these data simultaneously from all the VMware instances connected to the network. It notifies the resource manager to reschedule its resources dynamically when it identifies any abnormal behavior in the collected data. We use Apache Spark, a distributed framework, to process performance stream data and make predictions without any delay. Spark is chosen over traditional distributed frameworks (e.g., Hadoop and MapReduce, Mahout, etc.), which are not ideal for stream data processing. We have implemented a flat incremental clustering algorithm to model benign characteristics in our distributed Spark-based framework. We have compared the average processing latency of a tuple during clustering and prediction in Spark with that in Storm, another distributed framework for stream data processing. We find experimentally that Spark processes a tuple much faster than Storm on average.
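The abstract does not give the details of the flat incremental clustering algorithm, so the following is only a minimal single-machine sketch of the general idea, not the authors' Spark implementation. It assumes Euclidean distance and a fixed cluster radius; the class name `IncrementalClusterer`, the `radius` parameter, and the two-feature tuples (CPU load, memory usage) are hypothetical choices made for illustration.

```python
import math


class IncrementalClusterer:
    """Flat incremental clustering sketch (hypothetical, not the paper's code).

    Benign tuples are absorbed one at a time into the nearest cluster, or
    start a new cluster when no centroid lies within `radius`.
    """

    def __init__(self, radius):
        self.radius = radius   # assumed fixed distance threshold
        self.centroids = []    # one running-mean vector per cluster
        self.counts = []       # number of tuples absorbed by each cluster

    def _nearest(self, point):
        """Return (index, distance) of the closest centroid, or (None, inf)."""
        best_idx, best_dist = None, math.inf
        for i, centroid in enumerate(self.centroids):
            d = math.dist(point, centroid)
            if d < best_dist:
                best_idx, best_dist = i, d
        return best_idx, best_dist

    def learn(self, point):
        """Update the benign model with one training tuple."""
        idx, dist = self._nearest(point)
        if idx is None or dist > self.radius:
            # No cluster is close enough: open a new one at this point.
            self.centroids.append(list(point))
            self.counts.append(1)
            return
        # Otherwise move the centroid toward the point (running mean).
        self.counts[idx] += 1
        n = self.counts[idx]
        centroid = self.centroids[idx]
        for j, x in enumerate(point):
            centroid[j] += (x - centroid[j]) / n

    def is_anomaly(self, point):
        """Flag a tuple that falls outside every benign cluster."""
        _, dist = self._nearest(point)
        return dist > self.radius


# Hypothetical (CPU load %, memory usage %) tuples from benign operation.
model = IncrementalClusterer(radius=10.0)
for sample in [(20.0, 30.0), (22.0, 31.0), (21.0, 29.0)]:
    model.learn(sample)
```

In a streaming setting, `learn` would be called on tuples from a known-benign training window and `is_anomaly` on each incoming tuple afterwards; how that work is partitioned across Spark workers is not described in the abstract and is left out of this sketch.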

Published in:

Computational Intelligence in Cyber Security (CICS), 2014 IEEE Symposium on

Date of Conference:

9-12 Dec. 2014

Evaluating MapReduce frameworks for iterative Scientific Computing applications

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6903690&searchWithin%3Dhadoop%26refinements%3D4291944246%2C4291944822%26sortType%3Ddesc_p_Citation_Count%26ranges%3D2012_2015_p_Publication_Year%26queryText%3DSPARK

Jakovits, P. ; Inst. of Comput. Sci., Univ. of Tartu, Tartu, Estonia ; Srirama, S.N.

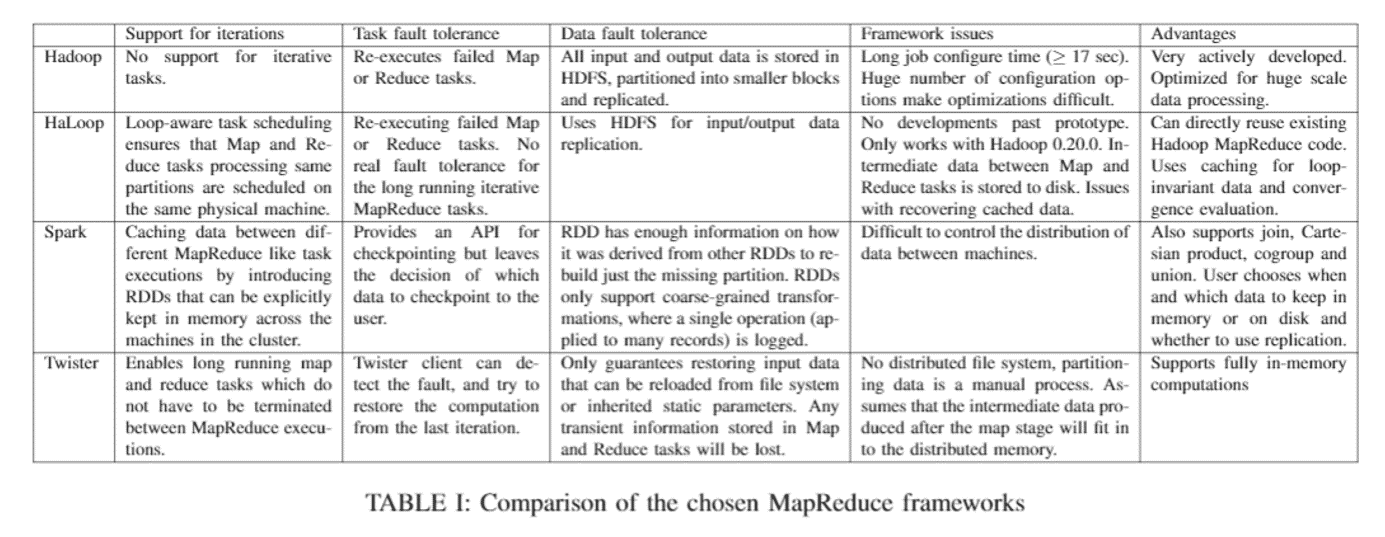

Scientific Computing deals with solving complex scientific problems by running resource-hungry computer simulation and modeling tasks on top of supercomputers, grids and clusters. Typical scientific computing applications can take months to create and debug when applying de facto parallelization solutions like Message Passing Interface (MPI), in which the bulk of the parallelization details have to be handled by the users. Frameworks based on the MapReduce model, like Hadoop, can greatly simplify creating distributed applications by handling most of the parallelization and fault recovery details automatically for the user. However, Hadoop is strictly designed for simple, embarrassingly parallel algorithms and is not suitable for complex and especially iterative algorithms often used in scientific computing. The goal of this work is to analyze alternative MapReduce frameworks and evaluate how well they are suited to solving resource-hungry scientific computing problems in comparison to the assumed worst-case (Hadoop MapReduce) and best-case (MPI) implementations for iterative algorithms.
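To make the difficulty with iterative algorithms concrete, here is a minimal single-process sketch of an iterative computation written in map/reduce style. It is not taken from the paper: one-dimensional k-means is used only as a stand-in for an iterative algorithm, and the function names `map_phase`, `reduce_phase`, and `iterate_kmeans` are hypothetical.

```python
from collections import defaultdict


def map_phase(points, centers):
    """Emit (index of nearest center, point) for every input point."""
    for p in points:
        nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
        yield nearest, p


def reduce_phase(pairs, centers):
    """Average the points assigned to each center to get the new centers."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    # A center with no assigned points keeps its previous position.
    return [
        sum(groups[i]) / len(groups[i]) if groups[i] else centers[i]
        for i in range(len(centers))
    ]


def iterate_kmeans(points, centers, max_rounds=20, tol=1e-9):
    """Run one map/reduce round per iteration until the centers stop moving."""
    rounds = 0
    for _ in range(max_rounds):
        new_centers = reduce_phase(map_phase(points, centers), centers)
        rounds += 1
        shift = max(abs(a - b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift <= tol:
            break
    return centers, rounds


centers, rounds = iterate_kmeans([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [0.0, 5.0])
print(centers, rounds)  # [2.0, 11.0] 3
```

Each pass through the loop in `iterate_kmeans` is a complete map/reduce round over the full input. In a framework that schedules every round as a separate job, the per-job startup and input re-reading cost is paid once per iteration, which is the kind of overhead an evaluation of alternative frameworks for iterative algorithms would need to measure.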

Published in:

High Performance Computing & Simulation (HPCS), 2014 International Conference on

Date of Conference:

21-25 July 2014

Understanding the behavior of in-memory computing workloads

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6983036&searchWithin%3Dhadoop%26refinements%3D4291944246%2C4291944822%26sortType%3Ddesc_p_Citation_Count%26ranges%3D2012_2015_p_Publication_Year%26queryText%3DSPARK

Tao Jiang ; SKL Comput. Archit., ICT, Beijing, China ; Qianlong Zhang ; Rui Hou ; Lin Chai

The increasing demands of big data applications have led researchers and practitioners to turn to in-memory computing to speed up processing. For instance, the Apache Spark framework stores intermediate results in memory to deliver good performance on iterative machine learning and interactive data analysis tasks. To the best of our knowledge, though, little work has been done to understand Spark's architectural and microarchitectural behaviors. Furthermore, although conventional commodity processors have been well optimized for traditional desktops and HPC, their effectiveness for Spark workloads remains to be studied. To shed some light on the effectiveness of conventional general-purpose processors on Spark workloads, we study the behavior of these workloads in comparison to that of Hadoop, CloudSuite, SPEC CPU2006, TPC-C, and DesktopCloud. We evaluate the benchmarks on a 17-node Xeon cluster. Our performance results reveal that Spark workloads have significantly different characteristics from Hadoop and traditional HPC benchmarks. At the system level, Spark workloads have good memory bandwidth utilization (up to 50%), stable memory accesses, and high disk IO request frequency (200 per second). At the microarchitectural level, the cache and TLB are effective for Spark workloads, but the L2 cache miss rate is high. We hope this work yields insights for chip and datacenter system designers.

Published in:

Workload Characterization (IISWC), 2014 IEEE International Symposium on

Date of Conference:

26-28 Oct. 2014

A Big Data Architecture for Large Scale Security Monitoring

Marchal, S. ; SnT, Univ. of Luxembourg, Luxembourg, Luxembourg ; Xiuyan Jiang ; State, R. ; Engel, T.

Network traffic is a rich source of information for security monitoring. However, the increasing volume of data to process raises issues, rendering holistic analysis of network traffic difficult. In this paper we propose a solution to cope with the tremendous amount of data to analyse for security monitoring purposes. We introduce an architecture dedicated to security monitoring of local enterprise networks. The application domain of such a system is mainly network intrusion detection and prevention, but it can also be used for forensic analysis. This architecture integrates two systems, one dedicated to scalable distributed data storage and management and the other dedicated to data exploitation. DNS data, NetFlow records, HTTP traffic and honeypot data are mined and correlated in a distributed system that leverages state-of-the-art big data solutions. Data correlation schemes are proposed and their performance is evaluated on several well-known big data frameworks, including Hadoop and Spark.
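The abstract does not describe the proposed correlation schemes, so the following is purely an illustrative sketch of what correlating two of the named sources could look like, not the authors' method. It assumes DNS responses and flow records are available as dictionaries; the field names (`query_name`, `answer_ip`, `src_ip`, `dst_ip`, `bytes`) and the function `correlate_flows_with_dns` are hypothetical.

```python
from collections import defaultdict


def correlate_flows_with_dns(dns_records, flow_records):
    """Annotate each flow with the domain names that resolved to its destination.

    Hypothetical scheme: join flow records to DNS responses on the IP address.
    """
    # Index DNS answers by resolved IP so the join is a dictionary lookup.
    names_by_ip = defaultdict(set)
    for rec in dns_records:
        names_by_ip[rec["answer_ip"]].add(rec["query_name"])

    enriched = []
    for flow in flow_records:
        domains = sorted(names_by_ip.get(flow["dst_ip"], ()))
        enriched.append({**flow, "domains": domains})
    return enriched


# Hypothetical sample records using documentation-reserved addresses.
dns = [{"query_name": "example.org", "answer_ip": "192.0.2.10"}]
flows = [
    {"src_ip": "10.0.0.5", "dst_ip": "192.0.2.10", "bytes": 1200},
    {"src_ip": "10.0.0.5", "dst_ip": "198.51.100.7", "bytes": 80},
]
for row in correlate_flows_with_dns(dns, flows):
    print(row["dst_ip"], row["domains"])
```

In this sketch, flows whose destination was never seen in a DNS answer come back with an empty `domains` list. In a distributed setting the same join would be expressed as a keyed join over the two datasets rather than an in-memory dictionary.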