I was originally using an 8GB SD card,

but it was far too small, so I bought a

SanDisk Extreme microSD U3 16GB

http://24h.pchome.com.tw/prod/DGAG0H-A9005QU7O?q=/S/DGAG0H

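I saved the original system as an image file and flashed it onto the new 16GB card, but the card still showed only 8GB!

Copying it over with dd gave the same result.

Thanks to a junior labmate who found the magic command: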

sudo raspi-config

In the configuration menu that opens, select Expand Filesystem and reboot; the filesystem then grows automatically to fill the whole card!
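Before running it, you can check whether the root partition really stops short of the card. A small sketch with illustrative helper names, assuming the card is /dev/mmcblk0 with the root filesystem on /dev/mmcblk0p2 (the usual Raspbian layout, not stated in the original) and an arbitrary 10% threshold:

```shell
# Sketch (illustrative helpers): is a large part of the SD card outside the root partition?
# Sizes are in bytes, e.g. (device names are assumptions, check yours with lsblk):
#   disk=$(lsblk -b -d -n -o SIZE /dev/mmcblk0)    # whole card
#   part=$(lsblk -b -d -n -o SIZE /dev/mmcblk0p2)  # root partition

# Print the integer percentage of the card not covered by the partition.
unused_percent() {
  local disk_bytes=$1 part_bytes=$2
  echo $(( (disk_bytes - part_bytes) * 100 / disk_bytes ))
}

# Succeed (exit status 0) when more than 10% of the card is unused,
# i.e. when "Expand Filesystem" is probably worth running.
needs_expand() {
  [ "$(unused_percent "$1" "$2")" -gt 10 ]
}
```

Usage: `needs_expand "$disk" "$part" && echo "run sudo raspi-config, then Expand Filesystem"`. On an 8GB image copied to a 16GB card, roughly half the card would be reported as unused.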

Wednesday, July 15, 2015

Friday, July 3, 2015

[Linux] CentOS: USB drives, file editing, and common commands

Find the USB drive

fdisk -l

Mount the USB drive (the mount point is usually /mnt)

mount /dev/sdb1 /mnt

Or, alternatively:

mount -t vfat /dev/sdb1 usb/

Unmount the USB drive

umount /mnt

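If umount fails because the device is busy, try this instead (note that fuser -km kills every process that is using the device):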

sudo fuser -km /dev/sdb1

sudo umount /dev/sdb1

[root@master01 spark]# mkdir /mnt/usb

[root@master01 spark]# mount -v -t auto /dev/sdb1 /mnt/usb

mount: you didn't specify a filesystem type for /dev/sdb1

I will try type vfat

/dev/sdb1 on /mnt/usb type vfat (rw)

[root@master01 spark]# mount /dev/sdb1 /mnt/usb

mount: /dev/sdb1 already mounted or /mnt/usb busy

mount: according to mtab, /dev/sdb1 is already mounted on /mnt/usb

[root@master01 spark]# cd /mnt/usb/

[root@master01 usb]# ls

123.txt

[root@master01 usb]# cp 123.txt /opt/spark/bin/examples/jverne/
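The "already mounted" error above can be avoided by checking /proc/mounts first. A minimal sketch; the helper names are illustrative, and the optional second argument only exists so the functions can be tried on a sample file:

```shell
# Sketch (illustrative helpers): look a device up in /proc/mounts.
# Each line there is: device mount-point fstype options dump pass

# Succeed if the device appears as a mounted source.
is_mounted() {
  local dev=$1 mounts=${2:-/proc/mounts}
  awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"
}

# Print the mount point(s) of a device, one per line.
mount_point_of() {
  local dev=$1 mounts=${2:-/proc/mounts}
  awk -v d="$dev" '$1 == d { print $2 }' "$mounts"
}
```

Usage: `is_mounted /dev/sdb1 || mount /dev/sdb1 /mnt/usb` only mounts when the drive is not already mounted.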

Quickly check disk usage

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_master01-lv_root

50G 2.7G 44G 6% /

tmpfs 16G 0 16G 0% /dev/shm

/dev/sda2 477M 67M 385M 15% /boot

/dev/sda1 200M 260K 200M 1% /boot/efi

/dev/mapper/vg_master01-lv_home

378G 1.4G 357G 1% /home

/dev/sdb1 7.5G 5.2G 2.3G 70% /home/hduser/usb

/dev/sdc1 7.5G 5.2G 2.3G 70% /home/hduser/usb
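To spot nearly full disks quickly, the output can be filtered. A sketch (illustrative helper name) that assumes `df -hP` is used instead of `df -h`: the POSIX format keeps each filesystem on a single line, whereas the output above wraps the long LVM names onto their own lines.

```shell
# Sketch (illustrative helper): list filesystems whose Use% is at or above a threshold.
# Reads `df -hP` output on stdin; the POSIX layout is
# Filesystem Size Used Avail Use% Mounted-on, one filesystem per line.
high_usage() {
  local threshold=${1:-80}
  awk -v t="$threshold" 'NR > 1 {
    use = $5; sub(/%/, "", use)          # "70%" -> "70"
    if (use + 0 >= t) print $1, $5, $6
  }'
}
```

Usage: `df -hP | high_usage 60` would list both 70% USB drives from the example above.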

vi and vim command reference

http://www.vixual.net/blog/archives/234

Compression and decompression command reference

http://www.centoscn.com/CentOS/help/2014/0613/3133.html

Force an immediate power-off (this bypasses the normal shutdown sequence, so use it with care)

poweroff -f

Shut down now

shutdown -h now

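Thursday, July 2, 2015

[Hadoop] Starting Hadoop with hadoop-daemon.sh

I recently needed to measure performance with the master excluded from computation.

But after removing the master from the slaves list, Hadoop stopped working entirely.

After asking in the community, I was advised to start each daemon with hadoop-daemon.sh start instead

(I had always just used start-all.sh).

Starting the daemons one by one means typing a few more commands, but at least it gets around the problem above.

First, on the master, start each daemon individually: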

hadoop-daemon.sh start namenode

hadoop-daemon.sh start secondarynamenode

yarn-daemon.sh start nodemanager

yarn-daemon.sh start resourcemanager

Let's check:

[hduser@master01 ~]$ jps

22550 NameNode

22818 SecondaryNameNode

9958 Master

23420 Jps

23027 NodeManager

23187 ResourceManager

21980 RunJar

Next, on each slave you want to use, start the DataNode:

hadoop-daemon.sh start datanode

Let's check:

[hduser@slave02 ~]$ jps

11274 Jps

11212 DataNode

4361 Worker
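With several nodes, comparing jps output by eye gets tedious. A sketch of a helper (the name is illustrative) that prints whichever expected daemons are missing from the jps output:

```shell
# Sketch (illustrative helper): print each expected daemon name that does not
# appear in `jps` output. Reads jps output ("PID Name" per line) on stdin;
# the expected names are the arguments.
missing_daemons() {
  local running d
  running=$(awk '{ print $2 }')        # keep only the process names
  for d in "$@"; do
    # -q quiet, -x whole-line match, -F fixed string
    printf '%s\n' "$running" | grep -qxF "$d" || echo "$d"
  done
}
```

Usage: on the master, `jps | missing_daemons NameNode SecondaryNameNode ResourceManager NodeManager`; on a slave, `jps | missing_daemons DataNode`. Empty output means everything expected is running.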

[Note] On a Banana Pi or Raspberry Pi the path is different: cd into the hadoop folder first, then start the daemon.

hduser@banana01 ~ $ hadoop-daemon.sh start datanode

-bash: hadoop-daemon.sh: command not found

hduser@banana01 ~ $ cd /opt/hadoop/

hduser@banana01 /opt/hadoop $ sbin/hadoop-daemon.sh start datanode

That looks fine; one final check:

[hduser@master01 ~]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

15/07/02 17:28:05 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 52844687360 (49.22 GB)

Present Capacity: 47795707904 (44.51 GB)

DFS Remaining: 47795683328 (44.51 GB)

DFS Used: 24576 (24 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Live datanodes (1): (confirmed, mission accomplished!)

Name: 192.168.70.103:50010 (slave02)

Hostname: slave02

Decommission Status : Normal

Configured Capacity: 52844687360 (49.22 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 5048979456 (4.70 GB)

DFS Remaining: 47795683328 (44.51 GB)

DFS Used%: 0.00%

DFS Remaining%: 90.45%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Jul 02 17:28:05 CST 2015
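For a scripted version of this final check, the count can be pulled out of the report. A sketch (illustrative helper name) that assumes the "Live datanodes (N):" line format shown above; other Hadoop versions may word it differently:

```shell
# Sketch (illustrative helper): extract N from the "Live datanodes (N):" line
# of a dfsadmin report read on stdin. Prints nothing if the line is absent.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)).*/\1/p'
}
```

Usage: `hdfs dfsadmin -report 2>/dev/null | live_datanodes` (the deprecation message above recommends the hdfs command over hadoop for this).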

[References]

What is best way to start and stop hadoop ecosystem?

http://stackoverflow.com/questions/17569423/what-is-best-way-to-start-and-stop-hadoop-ecosystem

Starting Hadoop: a detailed look at "hadoop-daemon.sh"

http://blog.csdn.net/sinoyang/article/details/8021296

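Tuesday, June 30, 2015

Tuesday, June 23, 2015

[Debian] Where the Java JDK lives on Debian + Hadoop & Spark settings

I recently needed to install Hadoop & Spark on a Banana Pi running Debian.

After copying the installation over from the CentOS master, I found that the Java path was wrong.

It wasn't anywhere I would normally expect it to be; after a lot of searching I finally found it:

/usr/lib/jvm/jdk-7-oracle-armhf

Then change the Hadoop settings in the following files: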

hduser@banana01 ~ $ sudo vi /etc/profile

hduser@banana01 ~ $ vi /home/hduser/.bashrc

hduser@banana01 ~ $ vi /opt/hadoop/libexec/hadoop-config.sh

hduser@banana01 ~ $ vi /opt/hadoop/etc/hadoop/hadoop-env.sh

hduser@banana01 ~ $ vi /opt/hadoop/etc/hadoop/yarn-env.sh

The original line looks roughly like this:

export JAVA_HOME=/usr/java/jdk1.7.0_65

On the Banana Pi, change it to:

export JAVA_HOME=/usr/lib/jvm/jdk-7-oracle-armhf
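Editing five files by hand is easy to get wrong. A minimal sketch (illustrative helper name) of the same substitution with sed, assuming GNU or BSD sed (both accept `-i.bak`) and that each file sets the variable on a line beginning with `export JAVA_HOME=`; commented-out lines such as `# export JAVA_HOME=...` are left untouched:

```shell
# Sketch (illustrative helper): point every "export JAVA_HOME=..." line in the
# given files at a new JDK path. A .bak copy of each file is kept.
set_java_home() {
  local new_home=$1 f
  shift
  for f in "$@"; do
    # "|" is the s/// delimiter because the paths contain "/"
    sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=${new_home}|" "$f"
  done
}
```

Usage: `set_java_home /usr/lib/jvm/jdk-7-oracle-armhf /opt/hadoop/etc/hadoop/hadoop-env.sh /opt/hadoop/etc/hadoop/yarn-env.sh`. Files whose JAVA_HOME line is written differently are not changed, so it is worth checking each one with `grep JAVA_HOME` afterwards; /etc/profile needs a root shell, since sudo does not see shell functions.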

Don't forget to give hduser ownership of the Hadoop & Spark directories:

sudo chown -R hduser:hadoop /opt/hadoop

sudo chown -R hduser:hadoop /opt/spark

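If you have more Pis (a 2nd, 3rd, 4th, ...) to add to the cluster,

save this system as an image, flash it onto the other SD cards, and then apply the following settings:

Give each board its own IP address: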

sudo nano /etc/network/interfaces
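For reference, a static address in /etc/network/interfaces (Debian's ifupdown format) typically looks like the stanza this sketch prints. The helper name, the interface name `eth0` and the addresses in the usage example are placeholders, not values from the original setup:

```shell
# Sketch (illustrative helper): print a static-IP stanza in Debian ifupdown
# syntax for /etc/network/interfaces. All arguments are placeholders you supply.
static_iface() {
  local iface=$1 addr=$2 mask=$3 gw=$4
  printf 'auto %s\niface %s inet static\n    address %s\n    netmask %s\n    gateway %s\n' \
    "$iface" "$iface" "$addr" "$mask" "$gw"
}
```

Usage: `static_iface eth0 192.168.70.111 255.255.255.0 192.168.70.1` (hypothetical addresses); review the output before pasting it into the file on each board.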

Set the hostname (e.g. Banana02):

sudo nano /etc/hostname

Finally, don't forget to give hduser ownership of Hadoop & Spark:

sudo chown -R hduser:hadoop /opt/hadoop

sudo chown -R hduser:hadoop /opt/spark

With these changes in place, you can start Hadoop & Spark!

Monday, June 15, 2015

[Linux] CentOS: commands for checking hardware information

CPU information

cat /proc/cpuinfo

How many CPU cores are there? (This counts logical processors, so hyper-threads are included.)

cat /proc/cpuinfo|grep "model name"|wc -l
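To separate logical CPUs from physical cores, a sketch with illustrative helper names; it assumes x86-style cpuinfo that includes "physical id" and "core id" fields (ARM boards such as the Pi may not have them):

```shell
# Sketch (illustrative helpers) for /proc/cpuinfo-style text:
#   logical  = number of "processor" entries (same as the model name count above)
#   physical = number of distinct (physical id, core id) pairs
logical_cpus() {
  grep -c '^processor' "${1:-/proc/cpuinfo}"
}

physical_cores() {
  awk -F': *' '
    /^physical id/ { pid = $2 }
    /^core id/     { seen[pid "-" $2] = 1 }
    END { n = 0; for (k in seen) n++; print n }
  ' "${1:-/proc/cpuinfo}"
}
```

On a hyper-threaded machine, logical_cpus should come out at twice physical_cores.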

Memory information

cat /proc/meminfo

What is the total memory? (See the MemTotal line.)

cat /proc/meminfo |grep "Total"
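MemTotal is reported in kB (really KiB), which is hard to read at a glance. A small sketch (illustrative helper name) that converts it to GiB:

```shell
# Sketch (illustrative helper): print MemTotal from /proc/meminfo in GiB,
# rounded to one decimal place. The value in the file is in kB (KiB).
mem_total_gib() {
  awk '/^MemTotal:/ { printf "%.1f\n", $2 / 1024 / 1024 }' "${1:-/proc/meminfo}"
}
```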

(To be continued)

Sunday, June 7, 2015

[Linux] CentOS: commands for changing the system date and time

Show the system time

date

Set the system time (requires root)

date MMDDhhmmYYYY

MM: two-digit month

DD: two-digit day of the month

hh: two-digit hour (24-hour clock)

mm: two-digit minute

YYYY: four-digit year

[hduser@master01 spark]$ date

Sun Jun  7 00:05:59 CST 2015

[hduser@master01 spark]$ date 060716342015

Sun Jun  7 16:34:00 CST 2015
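The field order (month first, year last) is easy to slip on. A small sketch (illustrative helper name) that builds the argument from separate values and zero-pads them; called with the values from the transcript above it reproduces 060716342015:

```shell
# Sketch (illustrative helper): build the MMDDhhmmYYYY argument for `date`
# from year, month, day, hour, minute. awk turns "08" into the number 8
# (decimal), so inputs with leading zeros are fine.
date_arg() {
  awk -v y="$1" -v m="$2" -v d="$3" -v h="$4" -v mi="$5" \
    'BEGIN { printf "%02d%02d%02d%02d%04d\n", m, d, h, mi, y }'
}
```

Usage: `sudo date "$(date_arg 2015 6 7 16 34)"`.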

Saturday, June 6, 2015

[Big Data] Dataset resources

Wiki

http://dumps.wikimedia.org/enwiki/

YAHOO!

http://webscope.sandbox.yahoo.com/catalog.php?datatype=g

Big Data Bench collection

http://prof.ict.ac.cn/BigDataBench/

Quora's collection

Sunday, May 17, 2015

Cluster monitoring tools (updated continuously)

Sematext - SPM

I think its UI looks great, but it is a paid product.

Ambari includes Ganglia & Nagios

Installing a Hadoop Cluster with three Commands

Ambari (the graphical monitoring and management environment for Hadoop)

Sharing my experience installing Ambari

Quickly deploying a Hadoop big data environment with Ambari

http://www.cnblogs.com/scotoma/archive/2013/05/18/3085248.html

Introduction to Ganglia

http://www.ascc.sinica.edu.tw/iascc/articals.php?_section=2.4&_op=?articalID:5134

RPi-Monitor

Built specifically for monitoring the Raspberry Pi:

- CPU Loads

- Network

- Disk Boot

- Disk Root

- Swap

- Memory

- Uptime

- Temperature

2015/05/17

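I'm looking for a tool that monitors Hadoop and Spark performance as well as the cluster's power consumption.

I haven't found an ideal solution yet.

[Paper Notes] Raspberry Pi-related papers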

Heterogeneity: The Key to Achieve Power-Proportional Computing

da Costa, G. ; IRIT, Univ. de Toulouse, Toulouse, France

The Smart 2020 report on low carbon economy in the information age shows that 2% of the global CO2 footprint will come from ICT in 2020. Out of these, 18% will be caused by data-centers, while 45% will come from personal computers. Classical research to reduce this footprint usually focuses on new consolidation techniques for global data-centers. In reality, personal computers and private computing infrastructures are here to stay. They are subject to irregular workload, and are usually largely under-loaded. Most of these computers waste tremendous amount of energy as nearly half of their maximum power consumption comes from simply being switched on. The ideal situation would be to use proportional computers that use nearly 0W when lightly loaded. This article shows the gains of using a perfectly proportional hardware on different type of data-centers: 50% gains for the servers used during 98 World Cup, 20% to the already optimized Google servers. Gains would attain up to 80% for personal computers. As such perfect hardware still does not exist, a real platform composed of Intel I7, Intel Atom and Raspberry Pi is evaluated. Using this infrastructure, gains are of 20% for the World Cup data-center, 5% for Google data-centers and up to 60% for personal computers.

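This paper compares the performance of Intel processors and the Pi, which makes it a useful reference for comparisons in heterogeneous environments.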

Published in:

Cluster, Cloud and Grid Computing (CCGrid), 2013 13th IEEE/ACM International Symposium on

Date of Conference:

13-16 May 2013

Affordable and Energy-Efficient Cloud Computing Clusters: The Bolzano Raspberry Pi Cloud Cluster Experiment

Abrahamsson, P. ; Fac. of Comput. Sci., Free Univ. of Bozen-Bolzano, Bolzano, Italy ; Helmer, S. ; Phaphoom, N. ; Nicolodi, L.

We present our ongoing work building a Raspberry Pi cluster consisting of 300 nodes. The unique characteristics of this single board computer pose several challenges, but also offer a number of interesting opportunities. On the one hand, a single Raspberry Pi can be purchased cheaply and has a low power consumption, which makes it possible to create an affordable and energy-efficient cluster. On the other hand, it lacks in computing power, which makes it difficult to run computationally intensive software on it. Nevertheless, by combining a large number of Raspberries into a cluster, this drawback can be (partially) offset. Here we report on the first important steps of creating our cluster: how to set up and configure the hardware and the system software, and how to monitor and maintain the system. We also discuss potential use cases for our cluster, the two most important being an inexpensive and green test bed for cloud computing research and a robust and mobile data center for operating in adverse environments.

Published in:

Cloud Computing Technology and Science (CloudCom), 2013 IEEE 5th International Conference on (Volume 2)

Date of Conference:

2-5 Dec. 2013

Technical development and socioeconomic implications of the Raspberry Pi as a learning tool in developing countries

Ali, M. ; Sch. of Eng., Univ. of Warwick, Coventry, UK ; Vlaskamp, J.H.A. ; Eddin, N.N. ; Falconer, B.

The recent development of the Raspberry Pi mini computer has provided new opportunities to enhance tools for education. The low cost means that it could be a viable option to develop solutions for education sectors in developing countries. This study describes the design, development and manufacture of a prototype solution for educational use within schools in Uganda whilst considering the social implications of implementing such solutions. This study aims to show the potential for providing an educational tool capable of teaching science, engineering and computing in the developing world. During the design and manufacture of the prototype, software and hardware were developed as well as testing performed to define the performance and limitation of the technology. This study showed that it is possible to develop a viable modular based computer systems for educational and teaching purposes. In addition to science, engineering and computing; this study considers the socioeconomic implications of introducing the EPi within developing countries. From a sociological perspective, it is shown that the success of EPi is dependant on understanding the social context, therefore a next phase implementation strategy is proposed.

Published in:

Computer Science and Electronic Engineering Conference (CEEC), 2013 5th

Date of Conference:

17-18 Sept. 2013

Raspberry PI Hadoop Cluster

A blog with installation instructions.

Tuesday, May 5, 2015

[Hadoop] "Browse the filesystem" link fails to connect

Clicking Browse the filesystem gives an error and the page cannot be reached.

[Solution 1] On master01, run:

cd /opt/hadoop/etc/hadoop

vi hdfs-site.xml

Add the following to hdfs-site.xml (this did not work):

<property>

<name>dfs.datanode.http.address</name>

<value>10.0.0.234:50075</value>

</property>

[Solution 2] On your local machine, run:

cd /etc

sudo vi hosts

Append the following to the end of the file (remember to use your own IPs), and browsing works:

192.168.70.101 master01

192.168.70.102 slave01

192.168.70.103 slave02
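Appending the same lines twice leaves duplicate entries. A sketch (illustrative helper name) that only appends a mapping when the hostname is not already listed; the file is a parameter so it can be tried on a copy before touching /etc/hosts:

```shell
# Sketch (illustrative helper): append "IP hostname" to a hosts-format file
# unless the hostname already appears as a name (fields 2..NF) on a
# non-comment line.
add_host() {
  local file=$1 ip=$2 name=$3
  if ! awk -v n="$name" '
      /^[ \t]*#/ { next }                        # skip comment lines
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage: `add_host /etc/hosts 192.168.70.101 master01`, run from a root shell (sudo does not see shell functions).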

[References]

http://www.cnblogs.com/hzmark/p/hadoop_browsethefilesystem.html

http://kurthung1224.pixnet.net/blog/post/170147913

Monday, May 4, 2015

[Spark] Word count practice

First, go to the spark folder

cd /opt/spark/

Start the Spark master and workers

sbin/start-all.sh

Launch spark-shell

bin/spark-shell

Set the path of the file we want to read

val path = "/in/123.txt"

Read the file in; sc is the SparkContext that spark-shell creates for you

val file = sc.textFile(path)

file is now an RDD; use collect to see what is inside it (fine for a small file, since collect pulls everything back to the driver)

file.collect

val line1 = file.flatMap(_.split(" "))

line1.collect

val line2 = line1.filter(_ != "")

line2.collect

val line3 = line2.map(s=> (s,1))

line3.collect

val line4 = line3.reduceByKey(_ + _)

line4.collect

line4.take(10)

line4.take(10).foreach(println)

The one-liner from the official docs:

val wordCounts = file.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey((a, b) => a + b)

wordCounts.collect()
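To cross-check Spark's result on a small input, the same steps can be done locally with standard Unix tools rather than Spark. A sketch (illustrative helper name) that runs on a local copy of the input file:

```shell
# Sketch (illustrative helper): local word count that follows the Spark steps:
# split on single spaces (flatMap), drop empty tokens (filter), then count
# each word (map + reduceByKey). Prints "word count" lines sorted by word
# in byte order (LC_ALL=C).
word_count() {
  tr ' ' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }'
}
```

Usage: `word_count 123.txt`; the pairs should match what line4.collect returns, just in a different order.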

Check how the job ran in the web UI:

http://[node IP]:4040/jobs/

[References]

https://spark.apache.org/docs/latest/quick-start.html

http://kurthung1224.pixnet.net/blog/post/275207950

[Hadoop] Word count example: hands-on tutorial

Hadoop on cloudera quickstart vm test example 01 wordcount

mkdir temp

cd temp

ls -ltr

echo "this is huiming and you can call me juiming or killniu i am good at statistical modeling and data analysis" > wordcount.txt

hdfs dfs -mkdir /user/cloudera/input

hdfs dfs -ls /user/cloudera/input

hdfs dfs -put /home/cloudera/temp/wordcount.txt /user/cloudera/input

hdfs dfs -ls /user/cloudera/input

The wordcount.txt we just created should be listed.

ls -ltr /usr/lib/hadoop-mapreduce/

hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar

hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar wordcount /user/cloudera/input/wordcount.txt /user/cloudera/output

hdfs dfs -ls /user/cloudera/output

hdfs dfs -cat /user/cloudera/output/part-r-00000

And there are the word counts!
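On a larger input, the most frequent words are usually what you want to see first. A sketch (illustrative helper name) that assumes the usual part-r-00000 layout of one `word<TAB>count` pair per line:

```shell
# Sketch (illustrative helper): show the N most frequent words from
# wordcount output read on stdin ("word<TAB>count" per line).
top_words() {
  local n=${1:-10} tab
  tab=$(printf '\t')
  sort -t "$tab" -k2,2nr | head -n "$n"
}
```

Usage: `hdfs dfs -cat /user/cloudera/output/part-r-00000 | top_words 5`.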

Saturday, May 2, 2015

[Mac] Installing wget on OS X

Download the latest wget (1.15 at the time)

curl -O http://ftp.gnu.org/gnu/wget/wget-1.15.tar.gz

Extract it

tar -xzf wget-1.15.tar.gz

Enter the directory

cd wget-1.15

Detect the build environment

./configure --with-ssl=openssl

If this step reports an error, open Xcode,

then run the same command again and it should succeed.

Compile

make

Install

sudo make install

Check that the installation worked

wget --help

Clean up

cd .. && rm -rf wget*

Sunday, April 12, 2015

[Spark] Some resources from my recent Spark research

Lightning-fast cluster computing

http://spark.apache.org/documentation.html

The official site always has the most complete information.

http://spark-summit.org/2014#videos

Spark Summit 2014 brought the Apache Spark community together on June 30- July 2, 2014 at the The Westin St. Francis in San Francisco. It featured production users of Spark, Shark, Spark Streaming and related projects.

All the slides and videos can be watched and downloaded for free. Awesome!!!!

2013

http://ampcamp.berkeley.edu/3/

2014

http://ampcamp.berkeley.edu/4/

Databricks Spark Reference Applications

An English e-book

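Spark Programming Guide, Traditional Chinese edition

A Chinese e-book that can be downloaded in full!

My senior labmate's blog (I'm learning along with it):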

http://kurthung1224.pixnet.net/blog/post/270485866

My professor's course website

https://bigdataanalytics2014.wordpress.com/

Monday, April 6, 2015

[Spark] Installing Spark on Ubuntu 14.04

I did this on VirtualBox, following these very detailed articles:

Install Apache Spark on Ubuntu-14.04

Building and running Spark 1.0 on Ubuntu

sbt/sbt assembly

Running this command ran into a problem:

java.io.IOException - Cannot run program "git": java.io.IOException: error=2

The fix is to exit and install git:

apt-get install git

Reference

https://confluence.atlassian.com/pages/viewpage.action?pageId=297672065

Sunday, March 29, 2015

[Paper Notes] Spark-related papers I'm reading

I'm reading papers on the systems side of Spark:

Spark-based anomaly detection over multi-source VMware performance data in real-time

Solaimani, M. ; Dept. of Comput. Sci., Univ. of Texas at Dallas, Richardson, TX, USA ; Iftekhar, M. ; Khan, L. ; Thuraisingham, B.

Anomaly detection refers to identifying the patterns in data that deviate from expected behavior. These non-conforming patterns are often termed as outliers, malwares, anomalies or exceptions in different application domains. This paper presents a novel, generic real-time distributed anomaly detection framework for multi-source stream data. As a case study, we have decided to detect anomaly for multi-source VMware-based cloud data center. The framework monitors VMware performance stream data (e.g., CPU load, memory usage, etc.) continuously. It collects these data simultaneously from all the VMwares connected to the network. It notifies the resource manager to reschedule its resources dynamically when it identifies any abnormal behavior of its collected data. We have used Apache Spark, a distributed framework for processing performance stream data and making prediction without any delay. Spark is chosen over a traditional distributed framework (e.g.,Hadoop and MapReduce, Mahout, etc.) that is not ideal for stream data processing. We have implemented a flat incremental clustering algorithm to model the benign characteristics in our distributed Spark based framework. We have compared the average processing latency of a tuple during clustering and prediction in Spark with Storm, another distributed framework for stream data processing. We experimentally find that Spark processes a tuple much quicker than Storm on average.

Published in:

Computational Intelligence in Cyber Security (CICS), 2014 IEEE Symposium on

Date of Conference:

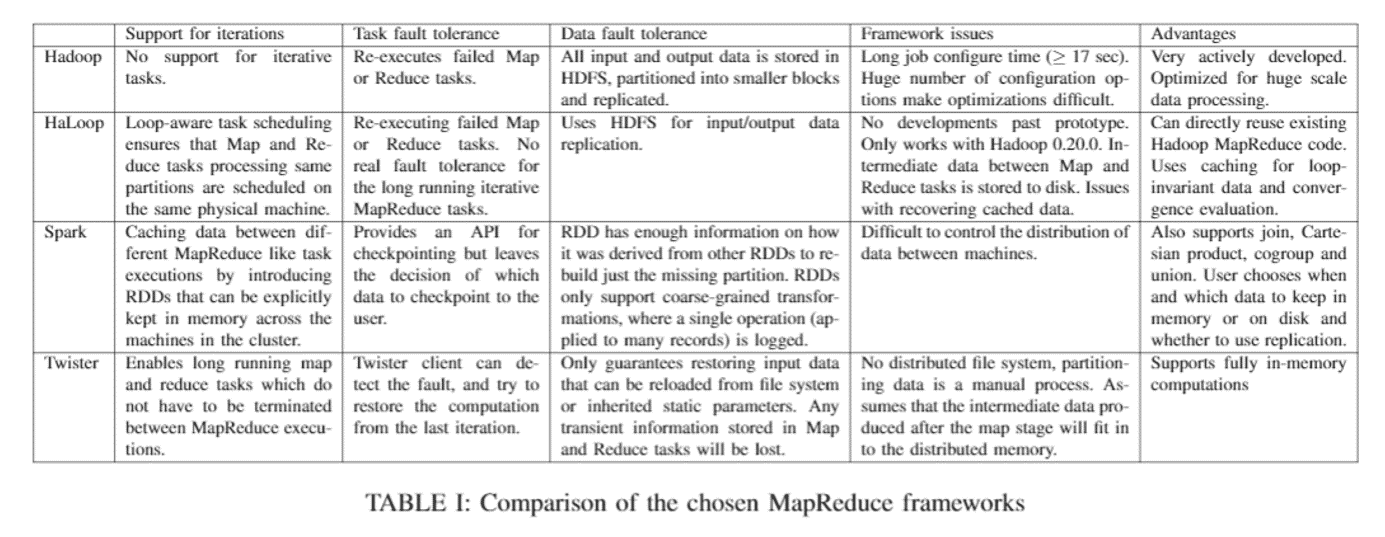

9-12 Dec. 2014

Evaluating MapReduce frameworks for iterative Scientific Computing applications

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6903690&searchWithin%3Dhadoop%26refinements%3D4291944246%2C4291944822%26sortType%3Ddesc_p_Citation_Count%26ranges%3D2012_2015_p_Publication_Year%26queryText%3DSPARK

Jakovits, P. ; Inst. of Comput. Sci., Univ. of Tartu, Tartu, Estonia ; Srirama, S.N.

Scientific Computing deals with solving complex scientific problems by applying resource-hungry computer simulation and modeling tasks on-top of supercomputers, grids and clusters. Typical scientific computing applications can take months to create and debug when applying de facto parallelization solutions like Message Passing Interface (MPI), in which the bulk of the parallelization details have to be handled by the users. Frameworks based on the MapReduce model, like Hadoop, can greatly simplify creating distributed applications by handling most of the parallelization and fault recovery details automatically for the user. However, Hadoop is strictly designed for simple, embarrassingly parallel algorithms and is not suitable for complex and especially iterative algorithms often used in scientific computing. The goal of this work is to analyze alternative MapReduce frameworks to evaluate how well they suit for solving resource hungry scientific computing problems in comparison to the assumed worst (Hadoop MapReduce) and best case (MPI) implementations for iterative algorithms.

Published in:

High Performance Computing & Simulation (HPCS), 2014 International Conference on

Date of Conference:

21-25 July 2014

Understanding the behavior of in-memory computing workloads

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6983036&searchWithin%3Dhadoop%26refinements%3D4291944246%2C4291944822%26sortType%3Ddesc_p_Citation_Count%26ranges%3D2012_2015_p_Publication_Year%26queryText%3DSPARK

Tao Jiang ; SKL Comput. Archit., ICT, Beijing, China ; Qianlong Zhang ; Rui Hou ; Lin Chai

The increasing demands of big data applications have led researchers and practitioners to turn to in-memory computing to speed processing. For instance, the Apache Spark framework stores intermediate results in memory to deliver good performance on iterative machine learning and interactive data analysis tasks. To the best of our knowledge, though, little work has been done to understand Spark's architectural and microarchitectural behaviors. Furthermore, although conventional commodity processors have been well optimized for traditional desktops and HPC, their effectiveness for Spark workloads remains to be studied. To shed some light on the effectiveness of conventional generalpurpose processors on Spark workloads, we study their behavior in comparison to those of Hadoop, CloudSuite, SPEC CPU2006, TPC-C, and DesktopCloud. We evaluate the benchmarks on a 17-node Xeon cluster. Our performance results reveal that Spark workloads have significantly different characteristics from Hadoop and traditional HPC benchmarks. At the system level, Spark workloads have good memory bandwidth utilization (up to 50%), stable memory accesses, and high disk IO request frequency (200 per second). At the microarchitectural level, the cache and TLB are effective for Spark workloads, but the L2 cache miss rate is high. We hope this work yields insights for chip and datacenter system designers.

Published in:

Workload Characterization (IISWC), 2014 IEEE International Symposium on

Date of Conference:

26-28 Oct. 2014

A Big Data Architecture for Large Scale Security Monitoring

Marchal, S. ; SnT, Univ. of Luxembourg, Luxembourg, Luxembourg ; Xiuyan Jiang ; State, R. ; Engel, T.

Network traffic is a rich source of information for security monitoring. However the increasing volume of data to treat raises issues, rendering holistic analysis of network traffic difficult. In this paper we propose a solution to cope with the tremendous amount of data to analyse for security monitoring perspectives. We introduce an architecture dedicated to security monitoring of local enterprise networks. The application domain of such a system is mainly network intrusion detection and prevention, but can be used as well for forensic analysis. This architecture integrates two systems, one dedicated to scalable distributed data storage and management and the other dedicated to data exploitation. DNS data, NetFlow records, HTTP traffic and honeypot data are mined and correlated in a distributed system that leverages state of the art big data solution. Data correlation schemes are proposed and their performance are evaluated against several well-known big data framework including Hadoop and Spark.

Published in:

Big Data (BigData Congress), 2014 IEEE International Congress on

Date of Conference:

June 27 2014-July 2 2014

Friday, March 27, 2015

[Hadoop] Starting and stopping Hadoop

Stop Hadoop (on the master)

stop-all.sh

Delete Hadoop's temporary directory (the leading backslash bypasses any rm alias such as rm -i)

\rm -r /opt/hadoop/tmp

Log in to slave01

ssh slave01

\rm -r /opt/hadoop/tmp

exit   # leave slave01

Log in to slave02

ssh slave02

\rm -r /opt/hadoop/tmp

exit   # leave slave02 and return to the master
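With more slaves, the log-in/delete/exit steps can be written as a loop. A sketch (illustrative helper name) that assumes passwordless ssh from the master and the same /opt/hadoop/tmp path on every node; it defaults to a dry run that only prints the commands:

```shell
# Sketch (illustrative helper): remove /opt/hadoop/tmp on each given host.
# DRY_RUN defaults to 1, which only prints the commands; set DRY_RUN=0 to
# actually run them over ssh.
clean_tmp() {
  local dir=/opt/hadoop/tmp host
  for host in "$@"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "ssh $host rm -r $dir"
    else
      ssh "$host" "rm -r $dir"
    fi
  done
}
```

Usage: check the printed commands with `clean_tmp slave01 slave02`, then run `DRY_RUN=0 clean_tmp slave01 slave02`. The master's own tmp directory is still removed separately, as above.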

Format the NameNode so the master starts over with a fresh NameNode (this erases the existing HDFS metadata)

hadoop namenode -format

Start Hadoop

start-all.sh

Check that Hadoop is running (NameNode web UI)

http://[master01 IP]:50070/

Check the MapReduce status (YARN ResourceManager web UI)

http://[master01 IP]:8088/

jps   # like a task manager: lists the Java processes that are running

hadoop dfsadmin -report   # show cluster status

[References]

Hadoop 2.2.0 Single Cluster Installation (Part 2) (CentOS 6.4 x64)

http://shaurong.blogspot.tw/2013/11/hadoop-220-single-cluster-centos-64-x64_7.html

Installing Hadoop on CentOS (pseudo-distributed mode)

http://zhans52.iteye.com/blog/1102649
